Unique Proxy Sources for the High-Performance Web Scraper

In the fast-paced world of web scraping, the importance of dependable proxy sources cannot be overstated. As scrapers work to streamline data extraction from multiple websites, having the right proxies can be the difference between success and failure. Proxies not only help overcome geographical restrictions but also play a vital role in preserving anonymity and keeping scraping operations efficient. With so many options available, it can be challenging to find unique and effective proxy sources that cater specifically to the needs of competitive web scrapers.

This article delves into the diverse landscape of proxies tailored for web scraping. From understanding the nuances of HTTP and SOCKS proxies to using tools for proxy scraping and checking, we will explore how to recognize and obtain the best proxy sources. Whether you choose free or paid solutions, proxies that deliver good speed and anonymity are essential. Come along as we cover creative methods for scraping proxies, the top tools for verification, and tips for navigating the proxy ecosystem effectively in 2025 and beyond.

Understanding Proxy Types

Proxies serve as intermediaries between a user and the internet, and understanding the various types is crucial for efficient web data extraction. The main categories are HTTP, HTTPS, and SOCKS proxies. HTTP proxies are designed specifically for web traffic and handle requests for websites. HTTPS proxies are similar but add a layer of security through encryption. SOCKS proxies, by contrast, can handle any type of traffic, making them versatile for applications beyond web requests.

Looking further into proxy categories, it is essential to distinguish between SOCKS4 and SOCKS5 proxies. SOCKS4 offers basic support for TCP connections and is suitable for reaching websites and applications with simple requirements. SOCKS5 extends this by supporting both TCP and UDP, allowing a wider range of uses, including streaming video and online gaming. It also supports authentication, adding an extra level of protection and control, which is advantageous when conducting sensitive data extraction operations.
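As a rough illustration of how these proxy types look in practice, the sketch below builds proxy URLs for each scheme. The helper names `build_proxy_url` and `proxies_mapping` are hypothetical, the addresses are documentation placeholders, and whether a given HTTP client accepts `socks4://` or `socks5://` URLs depends on that client and its optional SOCKS support.

```python
from urllib.parse import quote

# Schemes covered by this sketch (an assumption, not an exhaustive list).
SUPPORTED_SCHEMES = {"http", "https", "socks4", "socks5"}


def build_proxy_url(scheme, host, port, username=None, password=None):
    """Return a proxy URL such as 'socks5://user:pass@host:1080'."""
    if scheme not in SUPPORTED_SCHEMES:
        raise ValueError(f"unsupported proxy scheme: {scheme}")
    if scheme == "socks4" and password is not None:
        # SOCKS4 has no username/password authentication; SOCKS5 added it.
        raise ValueError("SOCKS4 does not support password authentication")
    auth = ""
    if username is not None:
        # Percent-encode credentials so characters like '@' do not break the URL.
        auth = quote(username, safe="")
        if password is not None:
            auth += ":" + quote(password, safe="")
        auth += "@"
    return f"{scheme}://{auth}{host}:{port}"


def proxies_mapping(proxy_url):
    """Use one proxy for both plain and TLS traffic, in the dict shape many clients accept."""
    return {"http": proxy_url, "https": proxy_url}


if __name__ == "__main__":
    url = build_proxy_url("socks5", "203.0.113.10", 1080, "user", "secret")
    print(proxies_mapping(url))
```

Rejecting a password for SOCKS4 up front is a design choice in this sketch: it surfaces a misconfiguration early instead of letting a connection fail later.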

Additionally, proxies can be categorized as public or private. Public proxies are available for free and can be used by anyone, but they often come with reliability and speed issues, making them a poor fit for professional scraping. Private proxies are dedicated to a single individual or entity, offering better performance, higher speeds, and improved anonymity. This difference matters to web scrapers who value data quality and operational efficiency.

Paid versus Free Proxies

When considering proxies for web scraping, it's essential to weigh the advantages and drawbacks of free versus paid options. Free proxies frequently attract users because they cost nothing, making them an appealing choice for individuals or small projects with tight budgets. However, these proxies may have significant drawbacks, including slower speeds, unreliable uptime, and potential security risks. Many free providers also do not guarantee anonymity, leaving users exposed to detection and potentially resulting in blocked IPs.

Conversely, paid proxies come with advantages that can be crucial for serious web scraping efforts. Paid services typically offer higher speeds and more dependable connections, along with personalized support and enhanced security features. Furthermore, they often provide a range of options, including residential and datacenter IP addresses, which can help in bypassing geo-restrictions and accessing a wider array of data sources. Investing in paid proxies can lead to a more streamlined and successful scraping process.

Ultimately, the choice between free and paid proxies depends on the scale of your scraping needs and your willingness to invest in tools that improve performance and reliability. For light use, free proxies might suffice, but for extensive or aggressive data extraction, the benefits of paid proxies are likely to outweigh the costs, making for a smoother and more successful scraping experience.

Best Proxy Sources for Web Scraping

When it comes to web scraping, using reliable proxy sources is vital for both speed and anonymity. One of the top sources is specialized proxy providers, which offer a variety of plans, from single-user packages to business solutions. Providers like ProxyProvider offer a selection of high-quality proxies that are optimized for scraping. These proxies usually feature high uptime and rotating IP addresses, reducing the chances of bans or throttling during data extraction.

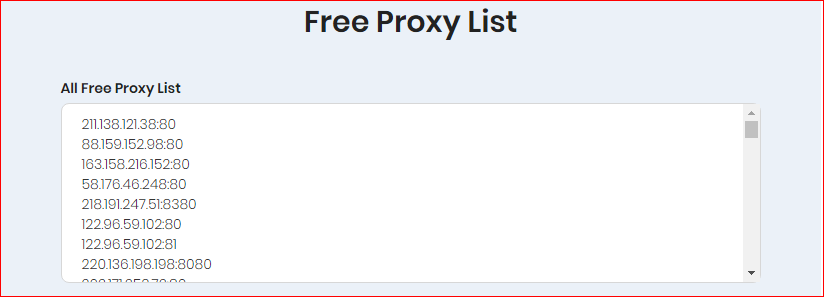

Another option for sourcing proxies is the free proxy lists available online. Websites that aggregate free proxies often refresh their lists regularly, allowing users to find fast and functional proxies without cost. However, free proxies carry risks, such as reduced speeds and exposure to unreliable servers. Users should verify each proxy's performance with a robust proxy checker, making sure it can handle the load and meets the anonymity needs of the scraping job.

For those looking to implement a more technical solution, proxy scraping with Python can be an effective approach. Using libraries like BeautifulSoup or Scrapy, developers can create custom scripts to fetch and test proxies directly from online sources. This method allows for a tailored approach, enabling users to filter for speed, anonymity level, and geographical location, ultimately leading to better results in web scraping projects.
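To make the extraction step concrete, here is a minimal sketch that pulls `ip:port` pairs out of already-downloaded page text. It deliberately uses a regular expression from the standard library instead of BeautifulSoup or Scrapy so it runs without extra installs. The function name `extract_proxies` and the sample text are illustrative assumptions, and the pattern handles IPv4 addresses only.

```python
import re

# Matches IPv4 'a.b.c.d:port' candidates; numeric ranges are validated separately below.
PROXY_PATTERN = re.compile(r"\b(\d{1,3}(?:\.\d{1,3}){3}):(\d{1,5})\b")


def extract_proxies(text):
    """Return unique 'ip:port' strings found in text, in first-seen order."""
    seen = set()
    result = []
    for ip, port in PROXY_PATTERN.findall(text):
        octets_ok = all(0 <= int(part) <= 255 for part in ip.split("."))
        port_ok = 1 <= int(port) <= 65535
        candidate = f"{ip}:{port}"
        if octets_ok and port_ok and candidate not in seen:
            seen.add(candidate)
            result.append(candidate)
    return result


if __name__ == "__main__":
    # Hypothetical snippet of a proxy-list page (addresses are documentation ranges).
    sample = "<td>203.0.113.5:8080</td><td>198.51.100.7:3128</td><td>999.1.1.1:80</td>"
    print(extract_proxies(sample))
```

A regex works on any text source, including plain-text lists. An HTML parser becomes worthwhile when the IP and port sit in separate table cells.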

Proxy Scraping Tools and Techniques

When collecting proxies for web scraping, using the right tools is important. A range of proxy scrapers is available, from free options to more advanced services. Free proxy scrapers are popular among newcomers, providing a simple way to collect proxies from open lists. For heavier workloads, however, an efficient proxy scraper can improve the speed and reliability of your scraping efforts. Programs such as ProxyStorm are notable for their extensive features and user-friendly interfaces, making them a leading choice for those serious about web scraping.

To confirm that the proxies you harvest are reliable, it is crucial to use a proxy checker. The best proxy checkers let you check not only whether a proxy is working but also how it performs and how well it protects your privacy. Understanding the difference between HTTP, SOCKS4, and SOCKS5 proxies can help you make informed choices based on your particular needs. Reliable proxies can dramatically affect the success of your web scraping tasks, helping you access websites with less risk of detection.
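A basic liveness check can be sketched with the standard library alone. The example below assumes an HTTP-type proxy (the standard library's `urllib` does not speak SOCKS) and uses `https://example.com/` as a placeholder test target. The names `fetch_via_proxy` and `is_working` are hypothetical, and the `fetch` parameter exists so the check logic can be exercised without network access.

```python
import http.client
import urllib.request

# Assumption: replace with any stable page you are permitted to request.
TEST_URL = "https://example.com/"


def fetch_via_proxy(proxy_url, target_url=TEST_URL, timeout=5.0):
    """Fetch target_url through proxy_url and return the HTTP status code."""
    handler = urllib.request.ProxyHandler({"http": proxy_url, "https": proxy_url})
    opener = urllib.request.build_opener(handler)
    with opener.open(target_url, timeout=timeout) as response:
        return response.status


def is_working(proxy_url, fetch=fetch_via_proxy):
    """Return True if the proxy returned HTTP 200; network errors count as failure."""
    try:
        return fetch(proxy_url) == 200
    except (OSError, http.client.HTTPException):
        # URLError, timeouts, and refused connections are all OSError subclasses.
        return False


if __name__ == "__main__":
    # Placeholder address from a documentation range; it is not expected to respond.
    print(is_working("http://203.0.113.5:8080"))
```

Treating every non-200 response as a failure is a conservative choice. A more lenient checker might accept redirects or retry once before discarding a proxy.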

In addition to using scrapers and checkers, learning proxy scraping with Python can provide flexibility. Python libraries let you automate the process of scraping proxies and verifying their performance. With these methods, you can find high-quality proxies that fit your needs, whether private or shared. Exploring SEO tools with proxy support can also extend your scraping capabilities and help you reach the better proxy sources in the field.

Assessing Proxy Anonymity and Performance

When using proxies for web data extraction, it's crucial to confirm that they offer both privacy and speed. An anonymous proxy conceals the user's IP address, which makes it hard for websites to track their activity. This is especially important when collecting data from competitive sources that may have systems in place to block or limit automated requests. To check a proxy's level of privacy, you can use online tools that show your IP address before and after connecting through the proxy. If the visible address changes and your real address stays hidden, the proxy can be considered anonymous.
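The before-and-after comparison can be expressed as a small classification function. This sketch assumes you already have the address and request headers that some echo service observed through the proxy. The three labels ("transparent", "anonymous", "elite") follow a common informal convention rather than a formal standard, and the name `classify_anonymity` and the header list are illustrative assumptions. Only IPv4 addresses are considered.

```python
import re

# Headers that commonly indicate a request passed through a proxy (assumed list).
REVEALING_HEADERS = ("via", "x-forwarded-for", "forwarded", "x-real-ip")
IPV4_PATTERN = re.compile(r"\d{1,3}(?:\.\d{1,3}){3}")


def classify_anonymity(real_ip, seen_ip, seen_headers):
    """Classify a proxy from what an echo endpoint observed.

    real_ip: your address without the proxy
    seen_ip: the address the endpoint saw through the proxy
    seen_headers: request headers the endpoint received, as a dict
    """
    headers = {name.lower(): value for name, value in seen_headers.items()}
    # The real address leaks if it is the visible address or appears in any header.
    leaked = seen_ip == real_ip or any(
        real_ip in IPV4_PATTERN.findall(value) for value in headers.values()
    )
    if leaked:
        return "transparent"
    if any(name in headers for name in REVEALING_HEADERS):
        return "anonymous"  # hides your IP but reveals that a proxy is in use
    return "elite"  # no leak and no proxy-revealing headers


if __name__ == "__main__":
    print(classify_anonymity("198.51.100.9", "203.0.113.5", {"Via": "1.1 proxy"}))
```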

Performance is another key factor when evaluating proxies for data extraction. A slow proxy can greatly reduce the efficiency of your scraping operations, leading to delays in data gathering. To check proxy performance, consider using dedicated tools or scripts that measure the latency and response times of your proxies. In addition, running speed tests at different times of day can show how they perform under varying network conditions.
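One way to script such a measurement is to time a request several times and summarize the results. In the sketch below, `request_once` stands for any zero-argument callable that performs a single request through the proxy under test. That callable, the name `measure_latency`, and the choice of the median as the summary are assumptions made for illustration.

```python
import statistics
import time


def measure_latency(request_once, attempts=5):
    """Call request_once() several times; return (median_seconds, failures).

    median_seconds is None when every attempt failed.
    """
    timings = []
    failures = 0
    for _ in range(attempts):
        start = time.perf_counter()
        try:
            request_once()
        except OSError:
            # Timeouts and connection errors count as failures, not as timings.
            failures += 1
            continue
        timings.append(time.perf_counter() - start)
    median = statistics.median(timings) if timings else None
    return median, failures


if __name__ == "__main__":
    # Stand-in for a real proxied request: sleeps briefly instead of using the network.
    print(measure_latency(lambda: time.sleep(0.01), attempts=3))
```

The median is used rather than the mean so that a single slow outlier does not dominate the result. Running the same measurement at different times of day gives the broader picture described above.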

Striking the right balance between privacy and performance is crucial. Some proxies may excel in privacy but lag in speed, while others respond quickly but reveal your IP. To improve your data extraction efforts, maintain a curated list of validated proxies and regularly recheck their anonymity and performance so they remain effective for your requirements. A reliable proxy verification tool makes this easier, helping you maintain an efficient proxy list that supports your web scraping goals.

Utilizing Proxies for Automation

In automation, proxies play a vital role in keeping operations running efficiently. When performing internet-based tasks such as data extraction, social media management, or content posting, relying on a single IP address can lead to restrictions and blocks from target websites. By using proxies, you can distribute your requests across multiple IP addresses, which helps preserve privacy and avoids overloading any individual connection. This is especially important when dealing with platforms that enforce strict rate limits.
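Distributing requests across addresses can be as simple as round-robin rotation. The sketch below pairs each target URL with the next proxy in a repeating cycle. The function name `assign_proxies` and the sample values are hypothetical, and real workloads often add per-proxy delays or weighting on top of this.

```python
import itertools


def assign_proxies(urls, proxies):
    """Pair each URL with the next proxy in round-robin order."""
    if not proxies:
        raise ValueError("at least one proxy is required")
    rotation = itertools.cycle(proxies)
    return [(url, next(rotation)) for url in urls]


if __name__ == "__main__":
    # Placeholder URLs and proxy addresses for illustration only.
    plan = assign_proxies(
        ["https://example.com/a", "https://example.com/b", "https://example.com/c"],
        ["http://203.0.113.5:8080", "http://198.51.100.7:3128"],
    )
    for url, proxy in plan:
        print(url, "via", proxy)
```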

Additionally, proxies help automation scripts mimic human behavior more convincingly. For instance, when performing actions such as logging in, navigating, or scraping data, a script using rotating proxies can resemble a genuine person’s browsing session. This lowers the chance of being identified as automated traffic. Proxy providers often let users pick specific geographic locations, which can further improve the relevance of the data and the chances of successful interactions with websites.

Moreover, using proxies can considerably improve the speed and efficiency of automated tasks. A fast proxy scraper can supply a list of high-performance proxies that deliver quick connections. This is crucial for time-sensitive automation, such as bidding in online auctions or scraping data for stock trading. Integrating a reliable proxy checker into your automation workflow helps ensure that only working proxies are used, improving overall efficiency and reducing errors during execution.
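Because checks are mostly spent waiting on the network, running them concurrently keeps this validation step from slowing the workflow down. The sketch below filters a proxy list with a thread pool. `check` is any callable that returns True for a usable proxy, and both that callable and the name `filter_working` are assumptions for illustration.

```python
from concurrent.futures import ThreadPoolExecutor


def filter_working(proxies, check, max_workers=20):
    """Run check(proxy) concurrently and keep proxies for which it returns True."""
    proxies = list(proxies)
    if not proxies:
        return []
    workers = min(max_workers, len(proxies))
    with ThreadPoolExecutor(max_workers=workers) as pool:
        # pool.map yields results in input order, so zip lines them up with proxies.
        results = list(pool.map(check, proxies))
    return [proxy for proxy, ok in zip(proxies, results) if ok]


if __name__ == "__main__":
    # Stand-in check that rejects one placeholder address without touching the network.
    candidates = ["203.0.113.5:8080", "198.51.100.7:3128", "192.0.2.1:80"]
    print(filter_working(candidates, lambda proxy: not proxy.startswith("192.")))
```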

SEO Tools with Proxy Support

When it comes to SEO, utilizing proxy servers can significantly enhance your strategies. Various SEO tools, including keyword research platforms, rank monitoring services, and web crawlers, often require effective proxy solutions to avoid IP bans and gather data efficiently. By incorporating proxy servers, you can scrape search engine results without being flagged, ensuring continuous access to valuable insights that drive your optimization efforts.

Additionally, tools that support proxy connections can collect data from different geographic locations. This is crucial for understanding how your website ranks in each region and helps you tailor your SEO approach accordingly. Services like ProxyStorm and others allow you to configure your proxy settings, enabling smooth operation and supporting multiple concurrent requests, which is important for comprehensive data analysis.

Using proxies within SEO tools also helps maintain anonymity and confidentiality. This is particularly important when conducting competitor analysis or scraping competitors’ websites. With reliable proxy support, you can gather the data you need without exposing your IP address, protecting your strategies and keeping your web scraping processes running smoothly.