Fastest Proxy Scraper Secrets: Tips for Speed and Efficiency

In the fast-moving world of web scraping and data extraction, a reliable proxy setup is essential for maintaining speed and efficiency. Proxies let users get around restrictions, protect their identity, and run many requests at once. However, not all proxies are created equal. Understanding how proxy scraping and checking work can considerably improve your web scraping projects and help you make the most of the resources available online.

This article shares the fastest proxy scraper tips, along with guidance and tools that streamline how you acquire and validate proxies. From the differences between HTTP and SOCKS proxies to the best free proxy checker options for 2025, we cover the strategies you need to find and verify high-quality proxies. Whether you want to automate your tasks, gather data from multiple sources, or simply test proxy anonymity, this guide will give you the knowledge you need to manage proxies well.

Understanding Proxy Types

Proxy servers act as intermediaries between users and the internet, relaying requests and responses. There are several types of proxies, each with its own features and use cases. The most common are HTTP, HTTPS, and SOCKS proxies. HTTP proxies handle web traffic specifically, letting users browse websites, while HTTPS proxies add a secure connection by encrypting the data. SOCKS proxies, by contrast, can carry any type of traffic, which makes them suitable for protocols beyond web browsing.

When evaluating proxies, it's important to understand the differences between SOCKS4 and SOCKS5. SOCKS4 is the more basic version: it does not support authentication or IPv6, which limits its use in modern applications. SOCKS5 adds support for authentication, UDP, and IPv6, making it the better choice for users who need flexibility and security. Understanding these differences is essential for choosing the right proxy type for a given task, particularly in data extraction and automation.
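
As a rough illustration of how the protocol choice shows up in practice, the sketch below builds proxy URLs in the `scheme://user:pass@host:port` form that HTTP clients such as `requests` accept in their `proxies` mapping. The helper names `proxy_url` and `as_requests_proxies` are hypothetical, and the addresses are documentation placeholders, not real proxies.

```python
from urllib.parse import quote

SUPPORTED_SCHEMES = {"http", "https", "socks4", "socks5"}


def proxy_url(scheme, host, port, username=None, password=None):
    """Build a proxy URL, rejecting combinations the protocol cannot express."""
    if scheme not in SUPPORTED_SCHEMES:
        raise ValueError(f"unsupported proxy scheme: {scheme!r}")
    # SOCKS4 has no username/password authentication, so fail early.
    if scheme == "socks4" and password is not None:
        raise ValueError("SOCKS4 cannot authenticate with a password; use SOCKS5")
    # IPv6 literals must be wrapped in brackets inside a URL.
    if ":" in host:
        host = f"[{host}]"
    credentials = ""
    if username is not None:
        credentials = quote(username, safe="")
        if password is not None:
            credentials += ":" + quote(password, safe="")
        credentials += "@"
    return f"{scheme}://{credentials}{host}:{port}"


def as_requests_proxies(url):
    """Return the mapping shape expected by requests' `proxies=` argument."""
    return {"http": url, "https": url}
```

With `requests` installed together with its SOCKS extra (`pip install "requests[socks]"`), the mapping can be passed as `requests.get(target, proxies=as_requests_proxies(url))`.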

Another significant distinction is between private and public proxies. Public proxies are open to everyone and are usually free; however, they tend to be slower and are more likely to be unreliable or banned because of abuse. Private proxies, usually paid, are exclusive to one user and offer better performance, reliability, and anonymity. The choice between private and public proxies depends on your needs, whether that is casual browsing or intensive data collection.

Methods for Optimal Proxy Scraping

To speed up proxy scraping, run your requests concurrently. Concurrency lets your scraper make many requests at once, reducing the time needed to compile a thorough proxy list. Thread pools, Python’s asyncio, and frameworks such as Scrapy all handle many connections efficiently, keeping the scraping process fast.

Another important technique is to target high-quality sources for your proxies. Look for websites or databases known for offering reliable, regularly updated proxy lists. Free proxies are often slow and unreliable, so it may be worth investing in premium services that offer verified proxies. Checking a source's reputation in the web scraping community can also help you judge its trustworthiness.
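
The concurrency idea can be sketched with a thread pool from Python's standard library. This is only one possible approach (asyncio or Scrapy would also work), and the function names `fetch_text` and `fetch_all` are hypothetical. The `fetch` parameter is injectable so the download step can be swapped out or stubbed.

```python
from concurrent.futures import ThreadPoolExecutor, as_completed
from urllib.request import urlopen


def fetch_text(url, timeout=10):
    """Download one page and return its body as text."""
    with urlopen(url, timeout=timeout) as response:
        return response.read().decode("utf-8", errors="replace")


def fetch_all(urls, fetch=fetch_text, max_workers=20):
    """Fetch many URLs in parallel; sources that fail are skipped."""
    results = {}
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        # Submit every download up front so they run concurrently.
        futures = {pool.submit(fetch, url): url for url in urls}
        for future in as_completed(futures):
            url = futures[future]
            try:
                results[url] = future.result()
            except Exception:
                # One dead source should not abort the whole run.
                continue
    return results
```

Because the work is dominated by waiting on the network, threads are usually enough here; `max_workers` is a tuning knob rather than a recommended value.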

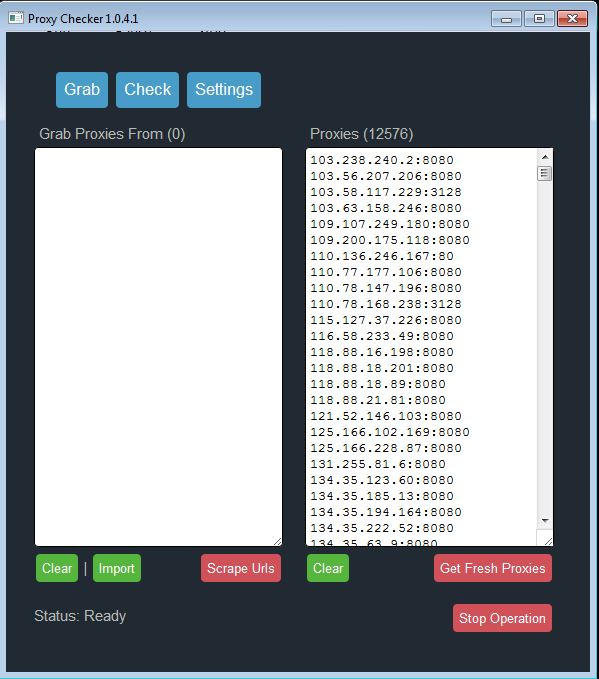

Finally, always build a verification step into your scraping routine. A proxy checker lets you remove dead or slow proxies quickly. This saves time by focusing on high-performing proxies and keeps your web scraping tasks running smoothly, without disruptions from failed connections or blocked requests.

Checking Proxy Speed and Anonymity

When using proxies for web scraping and automation, it’s crucial to assess both speed and anonymity to get the best results. Proxy speed determines how quickly you can load pages and handle large volumes of requests. A standard way to test it is to send simple requests to several websites through the proxy and measure the response time. A proxy checker tool can automate this task, letting you quickly identify which proxies perform best for your specific needs.
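
A minimal sketch of such a response-time test follows, using only the standard library. It assumes HTTP proxies given as `http://host:port` (urllib does not speak SOCKS), and the names `fetch_via_proxy`, `measure_latency`, and `rank_by_speed`, as well as the default test URL, are illustrative choices rather than part of any particular tool.

```python
import time
from urllib.request import ProxyHandler, build_opener


def fetch_via_proxy(proxy, url, timeout):
    """Issue one GET through the given HTTP proxy and read the full body."""
    opener = build_opener(ProxyHandler({"http": proxy, "https": proxy}))
    with opener.open(url, timeout=timeout) as response:
        response.read()


def measure_latency(proxy, url="http://example.com/", timeout=5.0,
                    fetch=fetch_via_proxy):
    """Return the round-trip time in seconds, or None if the proxy failed."""
    start = time.perf_counter()
    try:
        fetch(proxy, url, timeout)
    except Exception:
        return None
    return time.perf_counter() - start


def rank_by_speed(proxies, **kwargs):
    """Return the working proxies, fastest first."""
    timings = {proxy: measure_latency(proxy, **kwargs) for proxy in proxies}
    working = {proxy: t for proxy, t in timings.items() if t is not None}
    return sorted(working, key=working.get)
```

A single request is a noisy measurement; averaging several requests to different sites per proxy gives a steadier ranking.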

In addition to speed, testing proxy anonymity is essential for security and privacy. There are three main anonymity levels: transparent, anonymous, and elite (high-anonymity). Transparent proxies disclose your IP address to the websites you visit. Anonymous proxies hide your IP but may still reveal that you are using a proxy. Elite proxies hide both, making it difficult for websites to detect proxy use at all. A reliable proxy checker can confirm the anonymity level of each proxy so that you choose the right ones for sensitive tasks.

Ultimately, regularly testing proxy speed and anonymity not only improves your scraping performance but also protects your personal information online. A good proxy validation tool will save you time and let you concentrate on gathering data. With these factors in mind, you can build a better proxy management plan for your web scraping tasks.
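
One common way to tell these levels apart is to send a request through the proxy to a service that echoes back the headers it received, then inspect them. The sketch below covers only the classification step, using the usual labels transparent, anonymous, and elite. It assumes you already have the echoed headers as a dict and know your real IPv4 address; the header list is a typical but not exhaustive set, and `classify_anonymity` is a hypothetical name.

```python
import re

# Headers that commonly betray the presence of a proxy (not exhaustive).
REVEALING_HEADERS = {
    "via",
    "forwarded",
    "x-forwarded-for",
    "x-real-ip",
    "proxy-connection",
}


def classify_anonymity(echoed_headers, real_ip):
    """Classify a proxy from the headers the target server received."""
    lowered = {name.lower(): str(value) for name, value in echoed_headers.items()}
    # Match the IP as a whole address, not as part of a longer number.
    ip_pattern = re.compile(rf"(?<!\d){re.escape(real_ip)}(?!\d)")
    if any(ip_pattern.search(value) for value in lowered.values()):
        return "transparent"  # the real IP leaked to the target
    if any(name in lowered for name in REVEALING_HEADERS):
        return "anonymous"    # IP hidden, but proxy use is visible
    return "elite"            # no sign of a proxy in the headers
```

Header inspection is a heuristic: a site can still detect proxies by other means, such as IP reputation lists.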

Top Proxy Sources for Web Scraping

When sourcing proxies for web scraping, stability and performance are essential. One of the best options is a dedicated proxy service that offers premium proxies built for scraping. These services typically supply both HTTP and SOCKS proxies, which gives you flexibility across different scraping requirements. Providers such as Bright Data (formerly Luminati) are known for their large proxy networks and offer geo-targeted proxies that are well suited to getting around geographic restrictions while scraping.

Another source is the open proxy lists published online. Several sites collect and refresh proxy addresses from many locations. These lists are a quick way to find free proxies, but quality varies significantly, so it is vital to run them through a reliable proxy checker to verify speed and anonymity. Sites like FreeProxyList and ProxyNova can be helpful, but keep in mind that free proxies often become unusable because of frequent downtime or bans.

Lastly, for those who want more control and privacy, setting up your own proxy server may be the best approach. This involves renting a VPS and configuring it to act as a proxy. Software such as Squid or Nginx lets you build a custom proxy that meets your specific needs. Because you control the server, this approach can give you a reliable and secure proxy for your web scraping projects.

Free vs Paid Proxies: A Side-by-Side Comparison

When choosing proxies for web scraping, one of the key decisions is whether to use free or paid services. Free proxies are easy to find and can look attractive for projects on a limited budget. However, they often come with downsides such as slower speeds, inconsistent performance, and a higher chance of being blocked by websites. Many free proxies are hosted on shared servers, leading to security vulnerabilities and unstable connections that can hinder scraping.

Paid proxies, on the other hand, offer several advantages for both speed and efficiency. They typically provide faster connections, more stable IP addresses, and better overall performance. Paid services often include customer support, which can be crucial when troubleshooting. They are also more likely to offer privacy and security, making them a good fit for critical data extraction tasks where consistency matters most.

Ultimately, the choice between free and paid proxies should match the needs of the project. For occasional or small scraping tasks, free proxies may be adequate. For professional data extraction, competitive analysis, or anything that requires consistent uptime and speed, a paid proxy service is usually the wiser choice, since it gives you access to reliable, high-quality proxies.

Tools and Scripts for Proxy Scraping

Several tools and scripts can substantially improve the speed and efficiency of proxy scraping. Proxy scrapers are essential for gathering a large and varied list of proxies. Popular options include ProxyStorm, which offers a platform for scraping both HTTP and SOCKS proxies. Many users also rely on open-source tools written in Python, which can be customized to gather proxies from various online sources.
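
At the core of most Python-based scrapers is a step that pulls `ip:port` pairs out of downloaded text. The following is a minimal sketch of that step, assuming IPv4 proxies listed in plain `ip:port` form; `extract_proxies` is a hypothetical helper name, and real proxy pages may need HTML parsing instead of a regular expression.

```python
import re

# Candidate IPv4 address followed by a port, e.g. 203.0.113.5:8080
PROXY_PATTERN = re.compile(r"\b((?:\d{1,3}\.){3}\d{1,3}):(\d{2,5})\b")


def extract_proxies(text):
    """Return unique, plausibly valid ip:port strings in order of appearance."""
    seen = set()
    proxies = []
    for ip, port in PROXY_PATTERN.findall(text):
        # Discard matches that are not valid addresses or ports.
        octets_ok = all(int(octet) <= 255 for octet in ip.split("."))
        port_ok = 0 < int(port) <= 65535
        candidate = f"{ip}:{port}"
        if octets_ok and port_ok and candidate not in seen:
            seen.add(candidate)
            proxies.append(candidate)
    return proxies
```

The result is only a list of candidates; each entry still has to be checked before use.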

If you want a quick, user-friendly solution, there are many free proxy scrapers available. These tools usually ship with preconfigured settings for scraping well-known proxy lists, so you can find proxies without in-depth technical knowledge. However, it is important to consider the quality of the proxies you collect, as many free sources include unreliable or slow ones. Combining free and paid services often gives the best balance of speed and reliability.

Once you have gathered your proxies, a proxy checker is vital for trimming the list down to the most capable ones. The best proxy checkers verify the speed, anonymity, and reliability of each proxy in real time. By building these tools into your workflow, you can keep your proxy list up to date and your web scraping or automation tasks running efficiently. Using scrapers and checkers together ultimately leads to better data extraction results.

Automating Web Scraping with Proxies

Automating web scraping can greatly improve data collection, particularly when combined with the right proxy tools. A proxy scraper lets you obtain a broad pool of IP addresses that can mask your scraping activity. By drawing on such a pool, you can rotate IP addresses often enough to avoid detection and reduce the likelihood of being blocked by target websites. This is vital for keeping uninterrupted access to data while scraping.
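
IP rotation can be as simple as cycling through the pool and retiring proxies that keep failing. The class below is a sketch under that assumption; `ProxyRotator`, its method names, and the `max_failures` threshold are all hypothetical choices, not the behavior of any specific tool.

```python
class ProxyRotator:
    """Round-robin over a proxy pool, skipping proxies that keep failing."""

    def __init__(self, proxies, max_failures=3):
        # Drop duplicates while keeping the original order.
        self._proxies = list(dict.fromkeys(proxies))
        if not self._proxies:
            raise ValueError("need at least one proxy")
        self._failures = {proxy: 0 for proxy in self._proxies}
        self._max_failures = max_failures
        self._index = 0

    def next_proxy(self):
        """Return the next proxy that has not been retired."""
        for _ in range(len(self._proxies)):
            proxy = self._proxies[self._index]
            self._index = (self._index + 1) % len(self._proxies)
            if self._failures[proxy] < self._max_failures:
                return proxy
        raise RuntimeError("all proxies have been retired")

    def report_failure(self, proxy):
        """Count a failed request against the proxy."""
        self._failures[proxy] += 1

    def report_success(self, proxy):
        """Reset the failure count after a successful request."""
        self._failures[proxy] = 0
```

In a scraping loop, each request would call `next_proxy()`, then report success or failure so that dead proxies drop out of the rotation on their own.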

A proxy checker is essential in this process to confirm the quality and speed of the proxies in use. A reliable verification tool helps eliminate faulty or slow proxies, so you work only with the best proxy sources for web scraping. Whether you are using an HTTP proxy scraper or a SOCKS proxy checker, confirming that your proxies work well results in faster data extraction and a smoother scraping experience. Automating this verification saves considerable time and effort, letting you focus on analyzing the data rather than managing connections.

When automating scraping, understanding the differences between private and public proxies can guide your choice. Private proxies usually offer higher speeds and better reliability than free public proxies. Still, it is important to weigh the cost against the volume of data you need. If you want to scrape proxies for free, an online proxy list generator can be a good starting point, but consider premium proxies or tools like ProxyStorm for important projects. Ultimately, combining solid proxy automation with thorough checking will give the best results in data extraction.