The Craft of Proxy Scraping: Tips for Success

In the ever-evolving world of data extraction, proxy servers play a central role. Whether you are a seasoned web scraper or new to the field, understanding the subtleties of scraping with proxies and how to use the different kinds of proxies properly can considerably improve your results. Proxies let you browse privately, work around network restrictions, and access geo-restricted content. To get these benefits, however, you need the right tools and techniques.

This article explores proxy scraping and offers practical tips and strategies. From obtaining free proxies to understanding the differences between HTTP and SOCKS proxies, it covers a broad range of topics to help you get the most from your proxy setup. It also looks at tools for proxy verification, including free options for 2025, and shows how to assess proxy performance and evaluate anonymity. Whether you are looking for the fastest web scraping tool or the most reliable proxy sources, the sections below should help you make informed decisions in your web scraping work.

Understanding Proxy Servers

Proxies serve as intermediaries between a user's device and the internet, letting users browse discreetly and retrieve information without revealing their actual identity. When a user sends a request through a proxy, the proxy forwards that request to the intended website on the user's behalf. The site therefore sees the proxy's IP address instead of the user's, which improves confidentiality and security.

There are several types of proxies, including HTTP, SOCKS4, and SOCKS5, each serving a different role. HTTP proxies are mainly used for web traffic, while SOCKS proxies operate at a lower level and can carry other kinds of traffic: SOCKS4 relays TCP connections, and SOCKS5 adds UDP support and authentication. This makes SOCKS proxies versatile across applications. The choice usually depends on the user's specific needs, such as speed, anonymity, and compatibility with the services involved.

Proxies are essential in data extraction, where large amounts of information are gathered from websites. They help avoid IP bans and rate limiting, which makes extraction runs smoother and more efficient. Whether a user chooses free or premium proxies, understanding the characteristics and functions of these tools is essential for successful data collection and web automation.

Proxy Scraping Methods

When it comes to proxy scraping, several methods can help you obtain reliable, high-quality proxies. One of the most common is to use scraping tools designed to extract proxy lists from websites that publish free or paid proxies. A fast scraper lets you automate the process, which saves time and improves efficiency. Be sure to configure your scraper to handle each site's layout and to extract only well-formed proxy entries.
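As a rough illustration of extracting only well-formed entries, the sketch below pulls `ip:port` pairs out of raw page text with a regular expression and discards entries whose octets or port are out of range. The function name `extract_proxies` and the sample text are hypothetical, and the sketch assumes the list publishes each proxy as a single `ip:port` string; sites that put the address and port in separate table cells would need an HTML parser instead.

```python
import re

# Matches "ip:port" pairs such as 203.0.113.7:8080 anywhere in raw page text.
PROXY_PATTERN = re.compile(r"\b(\d{1,3}(?:\.\d{1,3}){3}):(\d{1,5})\b")


def extract_proxies(page_text):
    """Return unique, syntactically valid 'ip:port' strings in order of appearance."""
    seen = set()
    proxies = []
    for ip, port in PROXY_PATTERN.findall(page_text):
        octets_ok = all(0 <= int(part) <= 255 for part in ip.split("."))
        port_ok = 1 <= int(port) <= 65535
        candidate = f"{ip}:{port}"
        if octets_ok and port_ok and candidate not in seen:
            seen.add(candidate)
            proxies.append(candidate)
    return proxies


# Hypothetical page fragment: one duplicate and two malformed entries.
sample = (
    "<td>203.0.113.7:8080</td> 999.1.1.1:80 "
    "198.51.100.2:3128 203.0.113.7:8080 10.0.0.1:70000"
)
print(extract_proxies(sample))
```

This only checks syntax; whether a proxy actually works still has to be tested separately.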

Another crucial step is validating the proxies you collect. A trustworthy verification or testing tool will confirm that the proxies are operational and meet your requirements. Check criteria such as speed, anonymity, and geographic location, since these factors can significantly affect your scraping tasks. Filtering out dead or slow proxies early keeps the rest of your scraping pipeline efficient.
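The filtering step might look something like the following sketch. It assumes each proxy has already been tested and the outcome stored in a hypothetical `CheckResult` record; the field names, the anonymity labels ("transparent", "anonymous", "elite"), and the default thresholds are illustrative assumptions, not the output format of any particular checker.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class CheckResult:
    proxy: str                   # "ip:port"
    alive: bool                  # did a test request succeed?
    latency_s: Optional[float]   # round-trip time in seconds, None if dead
    anonymity: str               # assumed labels: "transparent", "anonymous", "elite"
    country: Optional[str]       # country code, if known


def filter_results(results, max_latency_s=2.0,
                   allowed_anonymity=("anonymous", "elite"), countries=None):
    """Keep live proxies that meet every criterion, fastest first."""
    kept = []
    for result in results:
        if not result.alive or result.latency_s is None:
            continue  # dead proxy
        if result.latency_s > max_latency_s:
            continue  # too slow
        if result.anonymity not in allowed_anonymity:
            continue  # leaks too much
        if countries is not None and result.country not in countries:
            continue  # wrong location
        kept.append(result)
    return sorted(kept, key=lambda result: result.latency_s)
```

Passing `countries=None` disables the location filter, which is useful when geography does not matter for the task.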

Finally, understanding the different types of proxies can improve your scraping approach. Distinguishing between HTTP, SOCKS4, and SOCKS5 proxies matters because each has its strengths and drawbacks. HTTP proxies are often the better fit for ordinary web requests, while SOCKS proxies offer greater flexibility and work with other protocols. Using a mix of private and public proxies can also improve your chances of getting past restrictions and reaching the data you need, so it pays to learn how to balance the two.
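In client code, the proxy type usually shows up as the URL scheme. The sketch below builds a scheme-to-URL mapping of the kind the `requests` library accepts in its `proxies` argument (SOCKS schemes there need the optional `requests[socks]` extra). The helper name `build_proxy_mapping` and the addresses are hypothetical, and the function itself only formats strings.

```python
from urllib.parse import quote

SUPPORTED_SCHEMES = {"http", "socks4", "socks5"}


def build_proxy_mapping(host, port, scheme="http", username=None, password=None):
    """Return a {'http': url, 'https': url} mapping for one proxy."""
    if scheme not in SUPPORTED_SCHEMES:
        raise ValueError(f"unsupported proxy scheme: {scheme!r}")
    credentials = ""
    if username is not None:
        # Percent-encode credentials so characters like '@' cannot break the URL.
        credentials = f"{quote(username, safe='')}:{quote(password or '', safe='')}@"
    proxy_url = f"{scheme}://{credentials}{host}:{port}"
    # Route both plain and TLS traffic through the same proxy.
    return {"http": proxy_url, "https": proxy_url}


print(build_proxy_mapping("203.0.113.7", 1080, scheme="socks5"))
```

Keeping the scheme explicit makes it easy to hold HTTP and SOCKS proxies in one list and pick per target site.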

Picking a Suitable Proxy Checker

When selecting a proxy checker, consider your specific needs and the features on offer. Tools vary in capability: some check anonymity levels, some run speed tests, and some support multiple proxy types. Depending on whether you need HTTP or SOCKS proxies, look for tools built to handle that protocol well. Understanding the distinctions between these protocols matters because they affect the performance and reliability of your scraping tasks.

Another important consideration is the speed of the checker itself. A fast proxy checker saves valuable time, especially with large proxy lists. Look for tools that can run bulk checks efficiently without compromising accuracy. Some of the best free proxy checkers also offer premium tiers with more speed and functionality, so it is worth exploring both to find what suits your requirements.
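Bulk checking is mostly a matter of running many slow network tests at once. The following sketch runs a caller-supplied test concurrently with a thread pool from the standard library. The name `check_many` and the `check_one` callback are assumptions made for illustration; `check_one` stands in for whatever single-proxy test you use and is expected to return a truthy value when the proxy works.

```python
from concurrent.futures import ThreadPoolExecutor


def check_many(proxies, check_one, max_workers=20):
    """Run check_one(proxy) for every proxy concurrently.

    Returns a dict mapping each proxy to True (working) or False.
    A check that raises is treated as a failed proxy, not a crash.
    """
    proxies = list(proxies)

    def safe_check(proxy):
        try:
            return bool(check_one(proxy))
        except Exception:
            return False

    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        # pool.map preserves input order, so results line up with proxies.
        outcomes = list(pool.map(safe_check, proxies))
    return dict(zip(proxies, outcomes))
```

Threads suit this job because each check spends nearly all its time waiting on the network; `max_workers` caps how many connections are open at once.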

Lastly, the user interface and ease of use are vital factors when selecting a proxy checker. A simple and intuitive layout allows you to quickly navigate through the tool and utilize its features without a steep learning curve. Ensure the tool you pick provides comprehensive documentation or support options, as this can significantly aid in troubleshooting and enhance your overall experience. By focusing on these key areas, you can find a proxy checker that meets your web scraping needs.

Checking Proxy Speed and Anonymity

When you use proxies for data extraction or task automation, checking their speed is important. A fast proxy can greatly reduce data retrieval times. To measure proxy speed, you can use specialized tools that assess response times. These tools typically send requests through the proxy and record how long it takes to receive a response. Proxies with the lowest latencies are usually the best choice for high-volume scraping.
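A minimal version of such a timing test, using only the Python standard library, could look like this. The function names, the test URL `http://example.com/`, and the choice of the median over three attempts are assumptions for the sketch; `fetch_via_proxy` handles plain HTTP proxies only.

```python
import statistics
import time
import urllib.request


def fetch_via_proxy(proxy, url="http://example.com/", timeout=5.0):
    """Fetch url through an HTTP proxy given as 'ip:port'."""
    handler = urllib.request.ProxyHandler(
        {"http": f"http://{proxy}", "https": f"http://{proxy}"}
    )
    opener = urllib.request.build_opener(handler)
    with opener.open(url, timeout=timeout) as response:
        response.read()


def measure_latency(proxy, fetch=fetch_via_proxy, attempts=3):
    """Return the median seconds per successful request, or None if all fail."""
    timings = []
    for _ in range(attempts):
        start = time.perf_counter()
        try:
            fetch(proxy)
        except Exception:
            continue  # failed attempts are not timed
        timings.append(time.perf_counter() - start)
    return statistics.median(timings) if timings else None
```

The median is less sensitive than the mean to a single slow attempt, and the `fetch` parameter lets you swap in a different client or a stub for testing.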

Anonymity is another key factor when choosing proxies. Different proxies offer different levels of anonymity, so it is important to check how well a proxy conceals your IP address. Online services can show whether a proxy reveals your original IP. The level of anonymity can also differ between categories of proxies, such as HTTP, SOCKS4, and SOCKS5. Understanding these differences and using proper verification tools will help you select proxies that keep your scraping operations undetected.

To improve your chances of finding high-quality proxies, combine speed and anonymity checks. When checking for speed, also examine the headers the proxy forwards to see whether it reveals any identifying data. This dual testing method lets you build an effective proxy list for your scraping requirements. Tools like ProxyStorm can aid in this verification process, providing both speed metrics and privacy testing to help improve your proxy choices.
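One common, informal way to grade anonymity is to send a request through the proxy to an endpoint that echoes back the headers it received, then inspect them. The sketch below assumes you already have those echoed headers as a dict. The three labels and the header list follow a widespread convention rather than any standard, and the function name is hypothetical.

```python
# Headers that typically indicate a proxy is in the path (assumed, not exhaustive).
PROXY_HEADERS = {"via", "x-forwarded-for", "forwarded", "x-real-ip", "proxy-connection"}


def classify_anonymity(echoed_headers, real_ip):
    """Classify a proxy from the headers the target server saw.

    transparent: your real IP appears somewhere in the headers
    anonymous:   proxy-revealing headers are present, but not your IP
    elite:       nothing reveals a proxy or your IP
    """
    normalized = {name.lower(): str(value) for name, value in echoed_headers.items()}
    # Simple substring check; a stricter version would parse each address.
    if any(real_ip in value for value in normalized.values()):
        return "transparent"
    if PROXY_HEADERS.intersection(normalized):
        return "anonymous"
    return "elite"


print(classify_anonymity({"Via": "1.1 proxy"}, "198.51.100.9"))
```

Header inspection only covers what the proxy adds to the request; it says nothing about whether the target site can identify proxy IP ranges by other means.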

Best Sources for Free Proxies

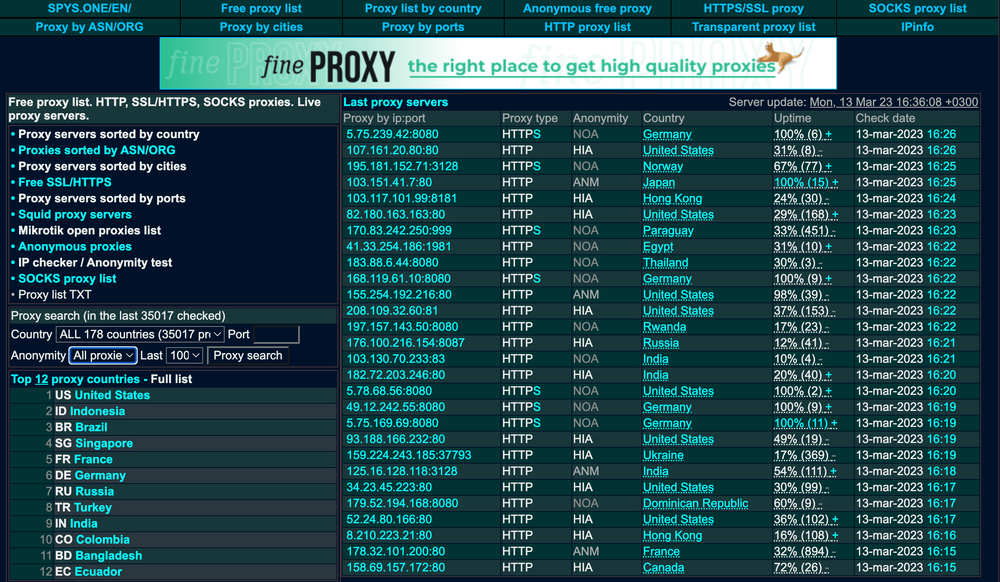

When sourcing free proxies, multiple websites provide compilations of proxy lists that can be invaluable for web scraping and other purposes. Websites like Free Proxy List provide regularly updated listings of SOCKS and HTTP proxies that can help you overcome geographical restrictions and effortlessly scrape data. It's crucial to check the credibility of these sources to ensure you're acquiring reliable proxies.

Online communities and forums are also good places to find free proxy sources. Many groups dedicated to web scraping and automation share their findings, including fresh links to proxy lists. Discussion boards often have threads where users post updated proxies, reliability data, and tips on credible sources. Engaging with these communities can lead you to lesser-known but effective proxies.

Finally, you might use proxy scraping tools that collect free proxies from several websites. Such tools streamline the process by compiling data from multiple sources, giving you a steady supply of fresh proxies. Pair them with a trustworthy proxy checker to confirm speed and anonymity, and you have a solid setup for your scraping tasks.

Tools for Proxy Management

Managing proxies properly is crucial for any automation tool. A key tool for this purpose is a trustworthy proxy scraper. The best proxy tools for data extraction gather available proxies from multiple sources, letting users build up a pool of proxies to choose from. A free proxy scraper can be an excellent way to begin, especially for beginners who want to try web scraping without spending money. Keep in mind, though, that free proxies may be limited in performance and reliability.

Another critical component of proxy management is a strong proxy checker. The top proxy checker tools verify that your proxies are working, including their anonymity levels and performance. A good checker gives detailed information about each proxy, helping you remove non-working proxies and keep only the highest-quality ones for your tasks. Combining an efficient proxy scraper with a checker streamlines your workflow and keeps performance high during web scraping tasks.

Finally, consider using an online proxy list compiler. Such tools gather proxies from various sources and help users find high-quality proxies efficiently. They can also distinguish between dedicated and shared proxies, which matters for users who need reliable connections. By integrating tools like ProxyStorm and other reputable services, you can improve your proxy management workflow and keep access to the best proxies for your tasks.

Optimizing Proxies for Data Extraction

When scraping the web, fine-tuning your proxies is vital for efficiency and reliability. Start by selecting a diverse range of proxies that includes both HTTP and SOCKS types. HTTP proxies are usually adequate for most scraping tasks, but SOCKS5 proxies offer more versatility, especially with more complex protocols. With a mix of both, you can handle a wider variety of sites and their differing security measures.

To maximize performance, use a robust proxy checker to test the speed and anonymity of your proxies. This step is crucial for filtering out slow or blocked proxies before scraping begins. Tools like ProxyStorm can help automate the verification process, letting you quickly determine which proxies are functional and suitable for your needs. Regularly monitoring and updating your proxy list will help maintain peak scraping performance.
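Ongoing monitoring can be as simple as counting failures while you scrape and retiring proxies that keep failing. The sketch below shows one possible shape for this: a hypothetical `ProxyPool` class that hands out proxies in round-robin order and drops any proxy after a configurable number of consecutive failures. The class name, method names, and the default threshold of three are illustrative assumptions.

```python
from collections import deque


class ProxyPool:
    """Round-robin pool that retires proxies after repeated failures."""

    def __init__(self, proxies, max_failures=3):
        # dict.fromkeys removes duplicates while keeping the original order.
        self._queue = deque(dict.fromkeys(proxies))
        self._failures = {proxy: 0 for proxy in self._queue}
        self._max_failures = max_failures

    def __len__(self):
        return len(self._queue)

    def next_proxy(self):
        """Return the next proxy in rotation."""
        if not self._queue:
            raise LookupError("no working proxies left")
        proxy = self._queue[0]
        self._queue.rotate(-1)  # move it to the back of the line
        return proxy

    def report(self, proxy, success):
        """Record the outcome of a request made through proxy."""
        if proxy not in self._failures:
            return  # already retired or never part of the pool
        if success:
            self._failures[proxy] = 0  # reset the consecutive-failure count
            return
        self._failures[proxy] += 1
        if self._failures[proxy] >= self._max_failures:
            self._queue.remove(proxy)
            del self._failures[proxy]
```

A scraper would call `next_proxy()` before each request and `report()` afterwards; when the pool runs low, that is the signal to scrape and check a fresh batch.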

Finally, weigh the trade-offs between public and private proxies. Free public proxies may look appealing, but they often come with lower reliability and speed. Investing in private proxies can deliver better performance, especially for demanding tasks such as large-scale data extraction and web automation. Ultimately, choosing high-quality proxies suited to your particular needs can greatly improve your web scraping results and reduce disruptions.